FUN2MODEL Research Themes:

Robustness Guarantees for Bayesian Neural Networks


Probabilistic safety and reachability for Bayesian neural networks.

Since adversarial examples are intuitively related to uncertainty, Bayesian neural networks (BNNs), i.e., neural networks with a probability distribution placed over their weights and biases, have the potential to provide stronger robustness properties. BNNs also enable principled evaluation of model uncertainty, which can be taken into account at prediction time to support safe decision making. We study probabilistic safety for BNNs, defined as the probability that, for all points in a given input set, the prediction of the BNN lies in a specified safe output set. In adversarial settings, this amounts to computing the probability that adversarial perturbations of an input cause only small variations in the BNN output, which is a probabilistic variant of the local robustness notion used for deterministic neural networks.
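
In symbols, with notation chosen here for illustration rather than taken from a specific paper: write f^w for the network with weights w, p(w | D) for the posterior given training data D, T for the input set and S for the safe output set. The second line is one assumed way to instantiate the adversarial case, using an ϵ-ball (here in the L∞ norm) around a test input x* and an output tolerance δ.

```latex
P_{\mathrm{safe}}(T, S) = \Pr_{w \sim p(w \mid \mathcal{D})}\bigl[\, \forall x \in T :\ f^{w}(x) \in S \,\bigr]

P_{\mathrm{safe}}^{\epsilon,\delta}(x^*) = \Pr_{w \sim p(w \mid \mathcal{D})}\bigl[\, \forall x \in B_{\epsilon}(x^*) :\ \lVert f^{w}(x) - f^{w}(x^*) \rVert \le \delta \,\bigr],
\quad B_{\epsilon}(x^*) = \{\, x : \lVert x - x^* \rVert_{\infty} \le \epsilon \,\}
```

Because the universal quantifier over x sits inside the probability, P_safe cannot be estimated by sampling inputs alone: each weight configuration has to be checked against the whole input set T at once, which is what bound-propagation techniques make possible.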

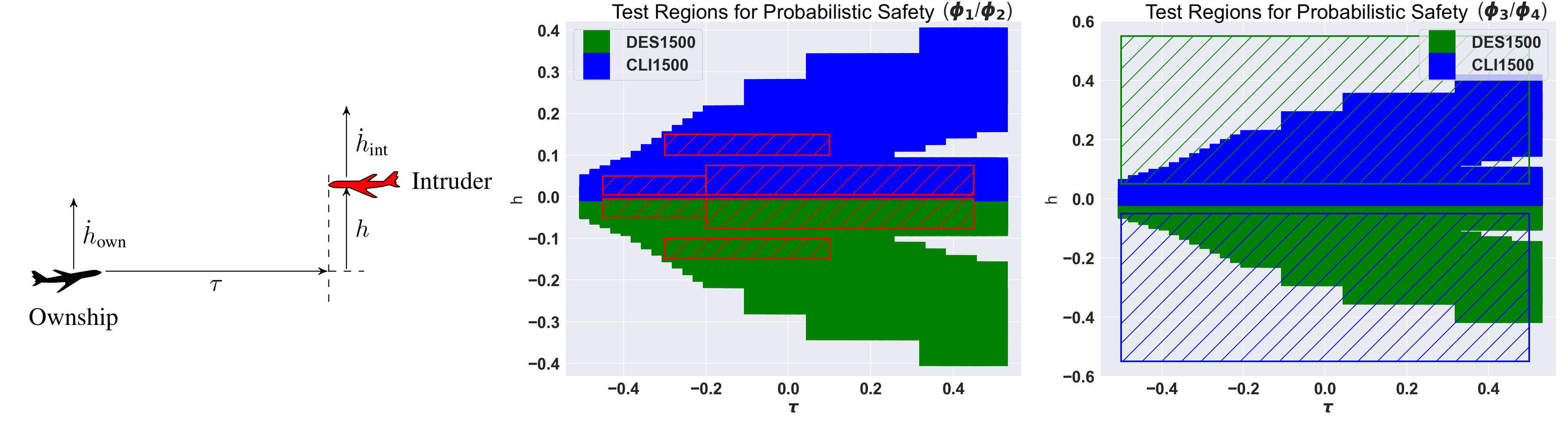

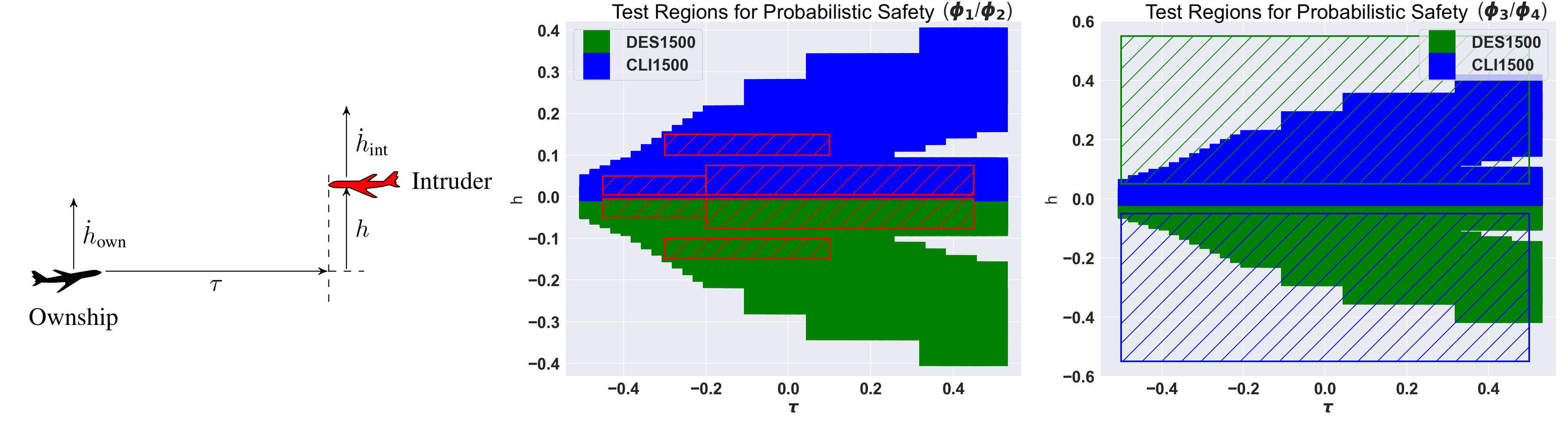

We propose a framework based on relaxation techniques from non-convex optimisation (interval and linear bound propagation) for the analysis of probabilistic safety for BNNs with general activation functions and multiple hidden layers. We evaluate the methods on VCAS, an autonomous aircraft controller. The image shows the geometry of VCAS (left), a visualisation of the ground-truth labels (centre) and the computed safe regions (right).
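
A minimal NumPy sketch of the interval idea, under simplifying assumptions chosen for illustration (a one-hidden-layer ReLU network, a mean-field Gaussian posterior, a box-shaped safe set S, and hypothetical helper names such as certify_weight_box): if interval bound propagation shows that every network whose weights lie in a box H maps the whole input box into S, then the posterior mass of H is a lower bound on P_safe. The sketch uses a single box centred on the posterior mean and plain interval arithmetic; linear bound propagation would give tighter bounds, and this is not the authors' implementation.

```python
import math
import numpy as np

def interval_affine(W_lo, W_hi, b_lo, b_hi, h_lo, h_hi):
    """Sound bounds on W @ h + b when every entry of W, b and h lies in an interval."""
    # The four corner products of each weight interval with its input interval;
    # h_lo / h_hi (shape (in,)) broadcast across the rows of W (shape (out, in)).
    corners = np.stack([W_lo * h_lo, W_lo * h_hi, W_hi * h_lo, W_hi * h_hi])
    lo = corners.min(axis=0).sum(axis=1) + b_lo
    hi = corners.max(axis=0).sum(axis=1) + b_hi
    return lo, hi

def ibp_bounds(box, x_lo, x_hi):
    """Output bounds of a one-hidden-layer ReLU net over an input box AND a weight box."""
    h_lo, h_hi = interval_affine(*box["W1"], *box["b1"], x_lo, x_hi)
    h_lo, h_hi = np.maximum(h_lo, 0.0), np.maximum(h_hi, 0.0)  # ReLU is monotone
    return interval_affine(*box["W2"], *box["b2"], h_lo, h_hi)

def gaussian_box_mass(mu, sigma, lo, hi):
    """Mass of the box [lo, hi] under independent Gaussians N(mu, sigma^2)."""
    erf = np.vectorize(math.erf)
    scale = sigma * math.sqrt(2.0)
    # Phi(b) - Phi(a) = 0.5 * (erf(b / sqrt 2) - erf(a / sqrt 2)), per parameter
    return float(np.prod(0.5 * (erf((hi - mu) / scale) - erf((lo - mu) / scale))))

def certify_weight_box(posterior, x_lo, x_hi, safe_lo, safe_hi, gamma=3.0):
    """Lower bound on P_safe from one weight box [mu - gamma*sigma, mu + gamma*sigma].

    If every network with weights in the box maps every x in [x_lo, x_hi] into
    [safe_lo, safe_hi], the box's posterior mass lower-bounds P_safe.
    """
    box, mass = {}, 1.0
    for name, (mu, sigma) in posterior.items():
        lo, hi = mu - gamma * sigma, mu + gamma * sigma
        box[name] = (lo, hi)
        mass *= gaussian_box_mass(mu, sigma, lo, hi)
    y_lo, y_hi = ibp_bounds(box, x_lo, x_hi)
    safe = bool(np.all(y_lo >= safe_lo) and np.all(y_hi <= safe_hi))
    return mass if safe else 0.0  # 0.0 is the trivial bound when the check fails

# Usage with a hypothetical mean-field posterior for a 2-8-1 network: (mean, std).
rng = np.random.default_rng(0)
posterior = {
    "W1": (rng.normal(size=(8, 2)), np.full((8, 2), 0.05)),
    "b1": (np.zeros(8), np.full(8, 0.05)),
    "W2": (rng.normal(size=(1, 8)), np.full((1, 8), 0.05)),
    "b2": (np.zeros(1), np.full(1, 0.05)),
}
x_star, eps = np.array([0.5, -0.2]), 0.05
print(certify_weight_box(posterior, x_star - eps, x_star + eps,
                         safe_lo=np.array([-10.0]), safe_hi=np.array([10.0])))
```

The half-width multiplier gamma trades off the two factors of the bound: a wider box carries more posterior mass but yields looser output intervals, so the safety check is more likely to fail and return the trivial bound 0.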

Certified training for Bayesian neural networks.

We develop the first principled framework for adversarial training of Bayesian neural networks (BNNs) with certifiable guarantees, enabling applications in safety-critical contexts. We rely on constraint-relaxation techniques for non-convex optimisation problems and modify the standard cross-entropy loss so that the posterior is robust to worst-case perturbations within ϵ-balls around input points.
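
The loss modification can be illustrated with the interval-bound-propagation (IBP) robust loss commonly used for deterministic networks, applied to each weight sample drawn from an approximate posterior. This is a minimal NumPy sketch under assumptions chosen for illustration (a one-hidden-layer ReLU network, a mean-field Gaussian posterior, the mixing weight kappa, and hypothetical helper names such as robust_ce and sample_params), not the authors' exact training objective. It only evaluates the loss; actual training would differentiate it with an autodiff framework.

```python
import numpy as np

def forward(params, x):
    """Logits of a one-hidden-layer ReLU network with fixed weights."""
    W1, b1, W2, b2 = params
    return W2 @ np.maximum(W1 @ x + b1, 0.0) + b2

def ibp_logit_bounds(params, x, eps):
    """Interval bounds on the logits over the L-infinity ball of radius eps around x."""
    W1, b1, W2, b2 = params
    lo, hi = x - eps, x + eps
    for layer, (W, b) in enumerate([(W1, b1), (W2, b2)]):
        centre, radius = (hi + lo) / 2, (hi - lo) / 2
        mid, rad = W @ centre + b, np.abs(W) @ radius  # centre-radius form of IBP
        lo, hi = mid - rad, mid + rad
        if layer == 0:  # ReLU after the hidden layer only
            lo, hi = np.maximum(lo, 0.0), np.maximum(hi, 0.0)
    return lo, hi

def cross_entropy(logits, label):
    """Numerically stable softmax cross-entropy of one example."""
    shifted = logits - logits.max()
    return float(np.log(np.exp(shifted).sum()) - shifted[label])

def robust_ce(params, x, label, eps, kappa=0.5):
    """kappa * clean CE + (1 - kappa) * CE on IBP worst-case logits (kappa is hypothetical)."""
    lo, hi = ibp_logit_bounds(params, x, eps)
    worst = hi.copy()
    worst[label] = lo[label]  # true class as low as possible, all others as high as possible
    clean = cross_entropy(forward(params, x), label)
    return kappa * clean + (1.0 - kappa) * cross_entropy(worst, label)

def bnn_robust_loss(sample_params, x, label, eps, n_samples=8, kappa=0.5, seed=0):
    """Monte Carlo average of robust_ce over weights drawn by sample_params(rng)."""
    rng = np.random.default_rng(seed)
    return float(np.mean([robust_ce(sample_params(rng), x, label, eps, kappa)
                          for _ in range(n_samples)]))

# Hypothetical mean-field Gaussian posterior for a 4-16-3 network (std 0.1 everywhere).
init = np.random.default_rng(1)
means = [init.normal(size=s) for s in [(16, 4), (16,), (3, 16), (3,)]]

def sample_params(rng, sigma=0.1):
    return tuple(m + sigma * rng.standard_normal(m.shape) for m in means)

x = np.array([0.2, -0.1, 0.4, 0.0])
print(bnn_robust_loss(sample_params, x, label=1, eps=0.0))  # clean loss only
print(bnn_robust_loss(sample_params, x, label=1, eps=0.1))  # at least as large
```

Scoring the true class at its lower bound and every other class at its upper bound gives logits that are at least as adversarial as any real perturbation in the ball. Minimising the cross-entropy on them therefore pushes each posterior sample towards certified robustness at radius eps.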

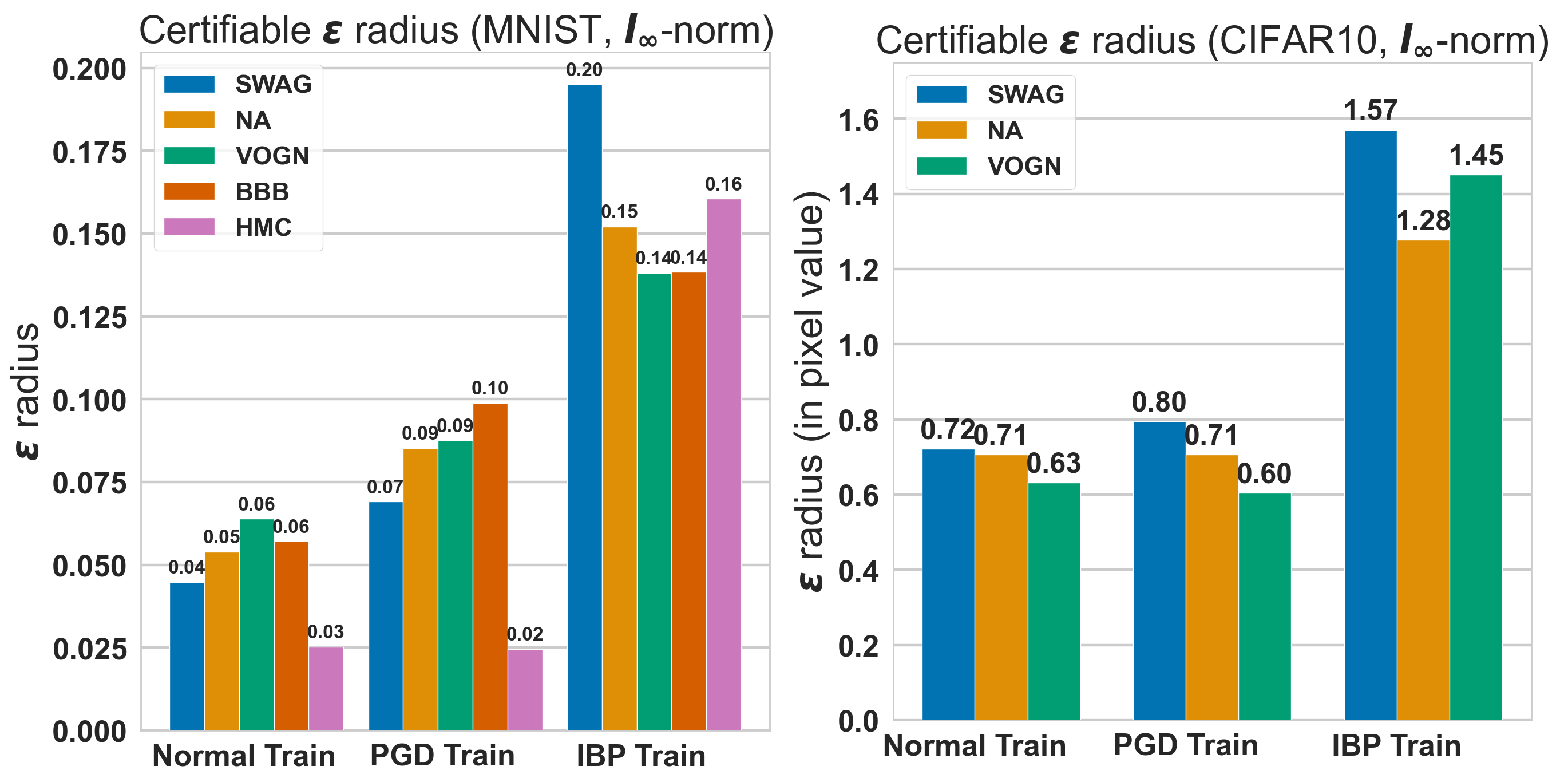

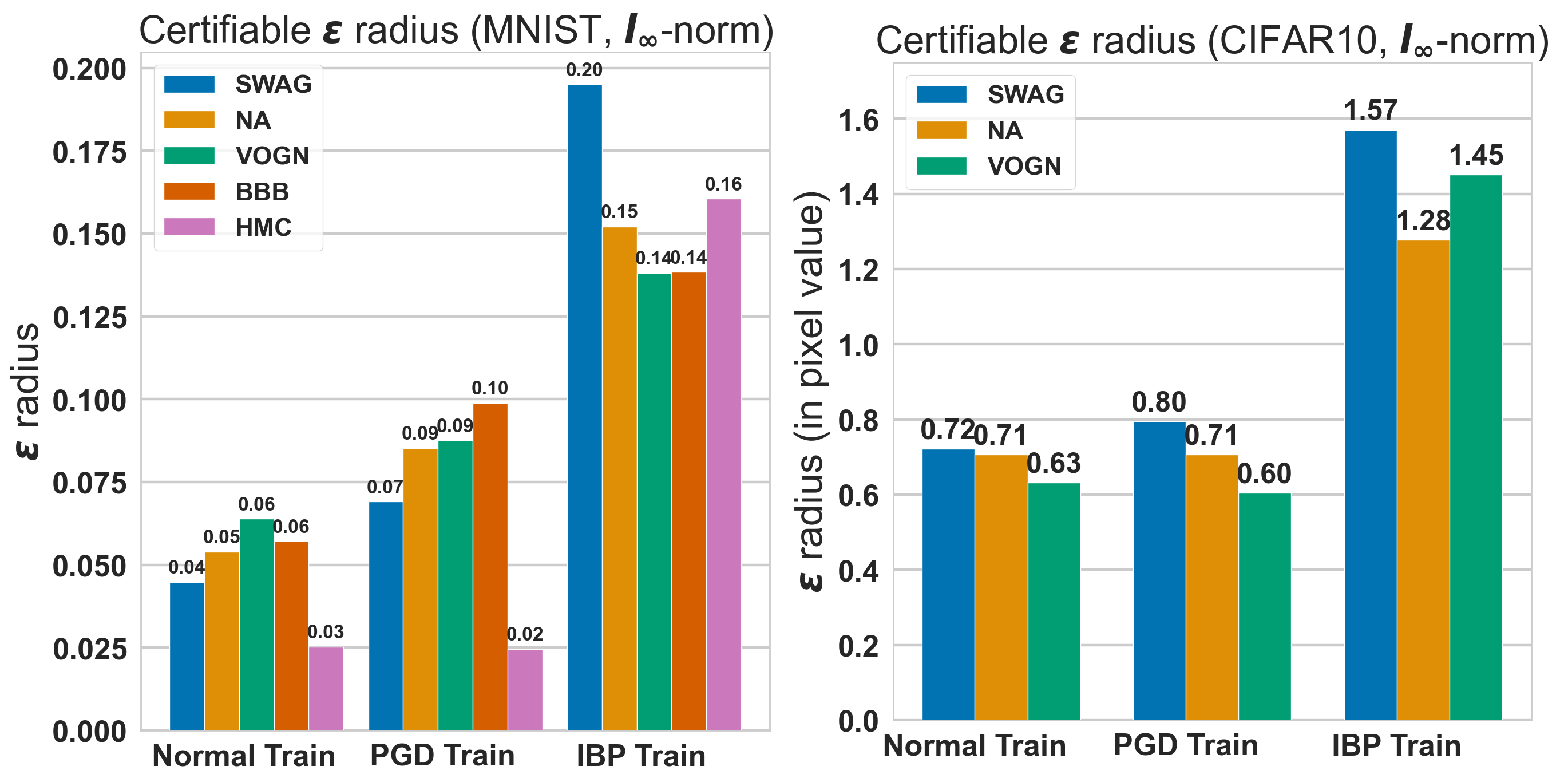

The plot shows the average certified radius, computed with CNN-Cert, for images from CIFAR-10 (left) and MNIST (right). We observe that robust training with IBP (Interval Bound Propagation) roughly doubles the maximum verifiable radius compared with both standard training and training on PGD (projected gradient descent) adversarial examples.

To learn more about these models and analysis techniques, follow the links below.

Software:

DeepBayes


9 publications:

- [WPL+24] Matthew Wicker, Andrea Patane, Luca Laurenti, Marta Kwiatkowska.
  Adversarial Robustness Certification for Bayesian Neural Networks.
  In Proc. 26th International Symposium on Formal Methods (FM'24 invited paper), Springer. To appear. 2024.
  https://arxiv.org/abs/2306.13614

- [Wic22] Matthew Wicker.
  Adversarial Robustness of Bayesian Neural Networks.
  Ph.D. thesis, Department of Computer Science, University of Oxford. 2022.

- [WLP+21] Matthew Wicker, Luca Laurenti, Andrea Patane, Zhuotong Chen, Zheng Zhang and Marta Kwiatkowska.
  Bayesian Inference with Certifiable Adversarial Robustness.
  In International Conference on Artificial Intelligence and Statistics (AISTATS'21), PMLR. April 2021.

- [WLP+21a] Matthew Wicker, Luca Laurenti, Andrea Patane, Nicola Paoletti, Alessandro Abate and Marta Kwiatkowska.
  Certification of Iterative Predictions in Bayesian Neural Networks.
  In 37th Conference on Uncertainty in Artificial Intelligence (UAI'21). May 2021.

- [Fal22] Rhiannon Falconmore.
  On the Role of Explainability and Uncertainty in Ensuring Safety of AI Applications.
  Ph.D. thesis, Department of Computer Science, University of Oxford. 2022.

- [WLPK20] Matthew Wicker, Luca Laurenti, Andrea Patane and Marta Kwiatkowska.
  Probabilistic Safety for Bayesian Neural Networks.
  In 36th Conference on Uncertainty in Artificial Intelligence (UAI'20), PMLR. August 2020.

- [ZWG+25] Xiyue Zhang, Zifan Wang, Yulong Gao, Licio Romao, Alessandro Abate, Marta Kwiatkowska.
  Risk-Averse Certification of Bayesian Neural Networks.
  Technical report, arXiv:2411.19729. Paper under submission. 2025.

- [Kwi22] Marta Kwiatkowska.
  Robustness Guarantees for Bayesian Neural Networks.
  In Proc. 19th International Conference on Quantitative Evaluation of SysTems (QEST 2022). 2022.

- [VSLK24] Jon Vadillo, Roberto Santana, Jose A. Lozano, Marta Kwiatkowska.
  Uncertainty-Aware Explanations Through Probabilistic Self-Explainable Neural Networks.
  Technical report, arXiv:2403.13740. Paper under submission. 2024.
  https://arxiv.org/abs/2403.13740

